We sacrifice control in the name of convenience. As we become like cyborgs, we should expect more control over our technology. Tech has long aimed to provide additional conveniences for modern living, with the idea that a gadget would take care of something for us. The premise is that our lives are made easier when we worry less about the small stuff, stepping aside to allow technology to do the grunt work. But the more we step aside, the less involved we are, and the less we control our environment, our information, our lives. We are giving algorithms control over increasingly complex aspects of our lives.

The idea of using an algorithm to care for humans has received popular attention recently with the case of a driver who died when his Tesla Model S drove underneath a semi that was crossing his lane. The car was in autopilot mode, with assistive radar and cameras activated; the driver died when the top of his car was sheared off by the underside of the semi trailer. Now begins the blame-aversion game that will become increasingly common as automation takes over automobiles: The car maker says autopilot is an assist feature and that the fault lies with the driver. Consumer Reports says the name “autopilot” suggests autonomy and that the fault lies with the software system. The driver — the one person directly affected by the incident — cannot share his take on things.

Things.

We want our things to talk to other things, to be aware of other things, to protect us from threatening things. We trust our safety and well-being to plenty of devices: electronic access-control devices, home security systems, airbags and anti-lock brakes, to say nothing of pacemakers. When our things fail to protect us, do we fault ourselves for too much trust, the programmers for too little insight, or the corporations for too much hubris?

As our devices become increasingly integrated into our daily lives, the need for transparency in those devices’ functions increases, too. And with so many of our devices gaining the ability to communicate with other devices (be they thermostats in our homes or server farms in Silicon Valley), that transparency becomes both more critical and more difficult to suss out. Yet rather than falling prey to the seductive simplicity and convenience of these devices, we need to exert our ability to control our own environments. Otherwise, our environments will be crafted for us, without regard for our interests, our privacy, or our humanity.

A particular set of studies provided fascinating perspectives on how our environments craft our humanity. They sought to address whether human nature could be directly manipulated by external control. Jesse Stommel recently wrote about what we can learn by looking back on the famous Milgram experiments of the 1960s. Those experiments have garnered more than their share of attention in the years since their initial publication. Myriad interpretations make it challenging to determine how best to read the findings, or whether anything was actually “found” in the first place.

The Milgram experiments, in brief, set out to determine whether people would follow orders in the face of perceived authority when those orders caused perceived harm to another human. The oft-cited initial article reported that 65% of the study’s participants were willing to deliver a lethal electric shock to another person when instructed by a researcher. The most common takeaway from this study is this: Everyday people can, when following orders, do evil things. Indeed, Milgram’s experiments can be seen as a direct response to the trial of Adolf Eichmann, who argued that he was “just following orders” when operating on behalf of the Nazis during the Holocaust.

Alex Haslam, Professor of Psychology and ARC Laureate Fellow at Australia’s University of Queensland, offers another interpretation of the studies. In an episode of the Radiolab podcast, Haslam presents results from his analysis of Milgram’s work. Truth be told, I’ve not been able to verify his claims through other sources, but Haslam says the way to predict how likely the participant was to progress all the way to lethal levels is to see how the experimenter told the research participant to persist. These experiments were heavily scripted, so the experimenters had a limited selection of responses, or “prods,” to use to encourage continued participation and stronger shocks. According to Haslam, the stronger the prod, the less likely a participant was to go along with the study, to the point where, once they were told they “have no other choice,” 0% of the participants would continue. According to Haslam, telling a person they have no say in a matter compels them toward complete disobedience. Considering how many classrooms have teacher-decreed “because I said so” rules and how many schools have “zero tolerance” discipline policies, Haslam’s assertion has dangerous consequences for student behavior. The more we restrict student choice and agency, the less willing they are to obey. Perhaps this connects with common complaints of students accused of being “unmotivated”; that term is often a euphemism for “noncompliant”.

Compliance with technology has become an issue outside the classroom, and the extent to which we give agency over to technology should concern us. What we consider part of our humanity has changed as electronic monitoring devices have become ubiquitous, affordable, smaller, and integrated with our communications tools. Our devices are a part of us — a condition that N. Katherine Hayles calls “embodied virtuality”. The nature of being human, Hayles tells us, “can be seamlessly articulated with intelligent machines” in our modern society. Our sense of self has become “an amalgam” of parts and pieces that combine to form “a material-informational entity”. Those familiar with the Star Trek television shows around the turn of the millennium will recall the Borg, an alien species that epitomizes the blend of the technological with the biological. The Borg represent the ultimate material-informational entity, and the shows that feature them use the cyborgs as a warning against what humans might become if we allow our technology to get too close or too embedded. Hayles herself discusses “the superiority of mobile (embodied) robots over computer programs, which have no capacity to move about and explore the environment” (202). Hayles wrote that in 1999, eight years before the introduction of the iPhone and more than a decade before our mobile devices could track our movements and measure the environment. We have since grown accustomed to an existence with embedded computers, essentially embodied because they are on us or with us at all times. We have grown so accustomed that we rarely question who designs or programs these devices. Who controls the computers on which our lives increasingly depend? To what end will the data we generate be put to use? Where is that data stored?

In his article, Jesse discusses the growing trend in education — especially with online, computer-driven, adaptive, or “personalized learning” systems — to use sensors and observational systems along with analytical algorithms to tailor instruction to the specific responses and performance of a specific student. There’s no human interaction at all, beyond the input of the programmers and the intentions of the algorithm creators. The software uses the hardware to manipulate the student. In Jesse’s words, “these things make education increasingly about obedience, not learning.” There’s the sense that students are made to be programmed, and that computers and algorithms can, once they understand us better, effectively program us. When we use technology explicitly to track student behavior, we tell students ‘you have no other choice.’ But what happens when our tools make the message more subtle and pervasive? What if our everyday reliance on myriad technological devices and systems wears down our ability to see opting out as a viable choice?

Enter the “Internet of Things”, with connected devices that gather, collect, and deliver data about the people using them. These devices monitor, track, and report on various aspects of users’ lives: where they parked their car, how many steps they took in a given day, how much they weigh each morning, the carbon dioxide content of their indoor air, the temperature of their living room, or even how fast their heart is beating. Tracking these things comes under the guise of convenience, self-monitoring, or “knowing your body”: we can summon the car from a garage or parking spot, make a daily exercise routine, monitor our weight loss, improve our respiratory health, cool off a residence before coming home, or ensure that a workout hits cardio burn. On the other hand, these devices come with disclosures. Any information they gather has to be processed and stored somewhere. If our data can be accessed over the Internet, that means it’s stored on someone else’s computers. Now consider that someone else’s computer knows where your car sits (from the parking record), whether you’re walking or stationary right now (based on motion tracking), how fit you are (based on your BMI), whether your windows are open at home (based on the carbon dioxide levels), whether you’re home (using location services), and whether you’re calm (according to your heart rate). Suddenly the elements of convenience seem like deeply personal invasions. This Internet of Things extracts levels of trust that we wrap in the trappings of “convenience”.

Jesse finds “something ominous about the capital-I and capital-T of the acronym IoT, a kind of officiousness in the way these devices are described as proliferating across our social and physical landscapes.” For my part, I find something ominous in the use of the mundane word “things”. It’s such a common, general term that it can make us become paranoid or suspicious, looking around, wondering which “things” are connected, what those things track or measure, and to whom they send their observations. We aren’t, like Milgram’s participants, being observed by one team of researchers. We’re being observed by tollbooths and traffic cameras, toasters and coffee makers, wifi hotspots in big-box retailers, even our own wristwatches — and consequently all the companies that sell us their vision of that connected technology. The more our tech connects us, the more we must ask, “…to what?”

Now consider the tech that we have — or have been promised — for education. The LMS can track how long each student spends on each page or in each quiz, where they are when they access it, what hardware and software students use, and possibly how long students had the LMS in the foreground on their screens. Then there’s the facial-recognition software that, in the name of preventing cheating, uses the student’s webcam to match the student’s face to an image on file; some even track student eye movements to see how long they spend looking at the screen versus at other sources. All this tracking is done by one system and shared with the LMS, to be reported as “analytics”. That kind of sharing is worrisome because humans are nowhere in the connection; only our programmatic agents are. When tracking data is given from the sensor to the LMS and “other applications” to generate analytics, we’re now handing the care of real people over to an algorithm. We’re having machines do the work of caring for others. There’s no outside observer, watching and witnessing the potential for abuse, reminding us that these studies affect real people.

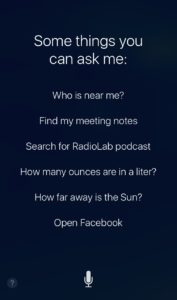

On the one hand, allowing our connected devices to talk to each other means we can stop sweating the small stuff. My air conditioner can cool my apartment before I get home because my phone knows where I am and can adjust the thermostat’s settings. I literally say to my phone “goodnight” (to turn off the lights and turn down the temperature) and “good morning” (to reverse the process) each day. I do this because I have bought into the ecosystem of a company, Apple, with a reputation for simplifying large technological problems, making them manageable for most people. In other words, the company’s software masks the complexity of a task. But rather than helping us understand the task, this kind of simplification helps us ignore the task and instead understand the device. I now communicate with my phone as a surrogate for adjusting the temperature and flipping light switches. Modern living now applies the same obedience principle too often seen in classrooms: Our devices now teach us not how to do things but rather how to comply with their interfaces. We are, as Seymour Papert warns, not programming the machines, but instead being programmed by them.

Notice what’s happened to human agency in my last paragraph and that image. I wrote about technical ability — what abilities my phone has, what actions it can take. I wrote about the way I talk to my device using phrases that are often reserved for the people we’re very close to: Saying “Good night” and “Good morning” from bed implies significant interpersonal intimacy. Yet I say those things to electronic equipment charging on my bedside table. In the image above, that same device presents the pronoun “me” as a self-referent, implying an identity, a persona. These theatrics can be beneficial — sometimes in endearing ways — but we should be watchful, aware of the limits of our own agency. My phone has been programmed to impersonate a human to convince me to interact with it. By suggesting a script for my questions, my phone attempts to program me.

This whole situation plays at the boundary of hybridity. We say a device has a personality. We say a person can be a tool. The human/device hybrid, the cyborg, becomes ever more common, more expected, more sought-after. We crave the devices and systems that keep us connected with one another. Social media gets its power by affording users the ability to connect with others. We must remember that, as Jesse put it, “the Internet is made of people, not things.” We must also remember that the things we use have the ability to control us or connect us. We need to know which is happening with each device we use. As educators, we must help our students learn to question how their devices, tools, technologies, apps, and games help connect them or control them; how those things collect and share their data; how their free apps and services turn them (or their data) into a commodity as a form of payment.

Pokémon GO and other augmented-reality games show how successful a careful balance of hybridity can be, bringing connected digital gameplay into the physical world on a massive scale. People use digital devices to interact with each other in physical spaces because of that game. Yet Pokémon GO is surely not without its faults — social, political, racial, economic, and technological — reminding us yet again of our imperative to question, to challenge, and to ensure awareness and agency. We need to focus on the potential for human connection in our technology, insisting that our tech bring us closer to other people, not deliver us into the clutches of an algorithm. It is up to us. We do have a choice.