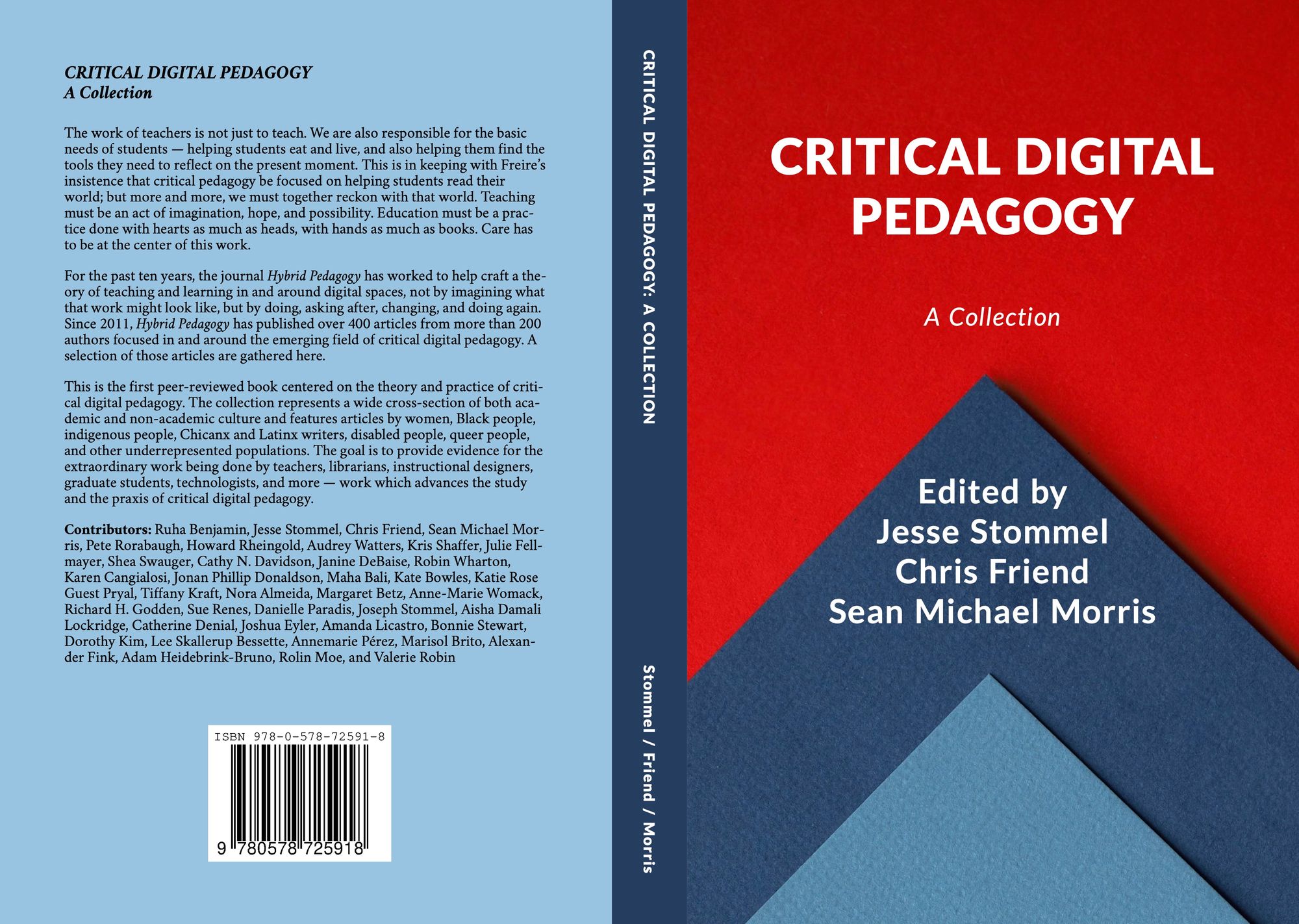

This piece was revised and expanded for a book from Hybrid Pedagogy, Critical Digital Pedagogy: A Collection, available now in paperback and Kindle editions.

Cheating is on the rise, we can’t trust students, and the best strategy to protect academic integrity is to invest in massive surveillance systems. At least, that’s the narrative that ed-tech companies catering to higher education are selling through their products and marketing campaigns. One of the products currently being adopted by colleges and universities is algorithmic test proctoring (software designed to automatically detect cheating in online tests), but we haven’t had enough critical conversation about what values are embedded in these systems and the potential harm they can cause students. If I take a test using an algorithmic test proctor, the software encodes my body as either normal or suspicious and my behaviors as safe or threatening. Because I am a cisgender, able-bodied, neurotypical, white man, these technologies generally categorize my body as normal and safe, so they would not endanger my education, well-being, employment, or academic standing. The majority of the students on my campus don’t share my identities and could have a very different experience being read by test proctoring algorithms. We need to understand how algorithmic test proctoring can discriminate against students based on their bodies and behaviors, why higher education is willing to endanger students in the first place, and what we can do about it.

What It Is and Why It's Here

Over the last fifteen years, higher education has increased the number of online courses and programs it offers. While the methods for cheating in online classes are often the same as those used in person, the institutional fear of increased cheating by online students has fostered a new and lucrative market for ed-tech companies. Common online cheating methods include using unauthorized information aids while taking a test and/or having someone other than the enrolled student take the test on their behalf, practices that predate both the internet and online tests. While in-person proctoring has been used to combat test-based cheating, it can be difficult to translate to online courses. Ed-tech companies have sought to address this concern by offering to watch students take online tests, in real time, through their webcams. If the outsourced proctor sees any evidence of cheating, as defined by the company or the institution, they can flag the behavior for later review; once the test is completed, the course owner is made aware of any flagged behavior. It is ultimately up to the course owner, not the proctor, to determine whether flagged behavior constitutes academic misconduct and, if so, how to address it. Some of the more prominent companies offering these services include Proctorio, Respondus, ProctorU, HonorLock, Kryterion Global Testing Solutions, and Examity.

Several companies, including Proctorio, Respondus, and ProctorU, have adapted the outsourced human-proctoring model to include algorithmic proctoring, sometimes called “automated proctoring.” Instead of a third-party employee watching each student take a test, the test session is recorded, including audio and video of the student, and run through internally developed machine learning algorithms that “watch” each video and flag suspicious behavior in real time. Flagged sections of the test are sent to the course owner who, as before, determines whether cheating occurred. To do this, these algorithms require a large dataset that establishes a baseline of “normal” bodies and behaviors from which to make decisions. In this case, the data are recordings of people taking tests, exhibiting both cheating and non-cheating behavior, and developers teach the algorithm which bodies and behaviors are suspicious and which are “normal.” At some point, with enough data and modifications, these companies deem their algorithms capable of accurately identifying cheating. While it’s unclear exactly how many institutions are implementing algorithmic test proctoring, at the low end we can say that third parties proctor at least tens of thousands of online tests every month. According to my conversations with representatives from Proctorio and ProctorU, the two companies administered about four million algorithmically proctored tests combined in 2018.

Potential Harms

Algorithmic test proctoring’s settings have discriminatory consequences across multiple identities, as well as serious privacy implications. For example, certain test settings flag loud noises or leaving the camera’s view as suspicious. These settings will disproportionately impact women, who typically take on the majority of childcare, breastfeeding, lactation, and caretaking roles for their families. Students who are parents may not be able to afford childcare, leave the house, or set aside quiet, uninterrupted blocks of time to take a test. Even though Title IX includes protections for pregnancy and parental status, default test settings like these classify the day-to-day logistics of caring for children and dependents as a threat to academic integrity.

Students with medical conditions that prevent them from sitting for long periods of time, such as neuromuscular disorders or spinal injuries, those who need to use the restroom frequently, and anyone who needs to administer medication during a test will be flagged. To identify themselves at the beginning of a test, students have to hold their ID stationary in front of their computer’s camera and reverse-orient it to fit a frame on the screen, a task requiring fine motor skills that even able-bodied students sometimes struggle with and that students with certain disabilities may not be able to perform. When eye-tracking is used, students with visual impairments such as blindness or nystagmus, as well as students who identify as autistic or neuroatypical, may be flagged. Even common test-taking behaviors, such as reading questions out loud or listening to music, can be flagged, as can behaviors such as the hyperactivity associated with ADHD. While accommodations are sometimes available for things like bathroom breaks, most proctoring software’s default settings label any body or behavior that doesn’t conform to the able-bodied, neurotypical ideal as a threat to academic integrity.

While racist technology calibrated for white skin isn’t new (everything from photography to soap dispensers does this), we see it deployed through the face detection and facial recognition used by algorithmic proctoring systems. Students with black or brown skin have been asked to shine more light on themselves when verifying their identities for a test, a consequence of both built-in computer cameras and facial recognition software being designed by and for white people. A Black student at my university reported being unable to use Proctorio because the system had trouble detecting their face, though it could detect the faces of their white peers. While some test proctoring companies develop their own facial recognition software, most purchase it from other companies; either way, these technologies generally function similarly and have shown a consistent inability to identify people with darker skin or even to tell Chinese people apart. Facial recognition literally encodes the invisibility of Black people and the racist stereotype that all Asian people look the same.

At the beginning of a test, these products ask students to verify their identity by matching their appearance with a photo ID. As Os Keyes has demonstrated, facial recognition has a terrible history with gender. This means that software asking students to verify their identity can compromise students who identify as trans or non-binary, or who express their gender in ways counter to cis/heteronormativity. If the gender expression or name on a student’s ID differs from their current gender expression or name, the algorithm may flag them as suspicious. When this happens, they may have to undergo another level of scrutiny to authenticate their identity, an already common and traumatic experience for trans and gender non-conforming students. If these students are not alerted to this possibility before the test begins, they may be forced either to discontinue the test and risk their grade or to out themselves to their course owner when they may not want to, risking further trauma and discrimination, including being denied financial aid, being forced to leave their institution, or having their lives put in physical danger.

Course owners who use these products are given access to recorded video and audio of their students taking tests, which can include the inside of students’ homes and bedrooms. A common feature of proctoring systems allows course owners to download their students’ recordings to a local device, and course owners can view those recordings as many times as they want, whenever and wherever they want. These features and settings create a system of asymmetric surveillance and unaccountability, conditions that have always created a risk of abuse and sexual harassment. Technologies like these have a long history of being abused, largely by heterosexual men at the expense of women’s bodies, privacy, and dignity. For example, university professors have used texting and social media to stalk their students, TSA employees targeted women for body scans and shared the images, police helicopters recorded people naked and having sex, National Security Agency employees shared sexually explicit photos they intercepted and used wiretapping technology to spy on current or former lovers, civic employees used CCTV to watch women undress in their homes, and domestic abusers used IoT devices to gaslight wives and partners. These are just a few examples, but they show how toxic masculinity has used technology to abuse women.

Additionally, proctoring systems often record the approximate location of students while they take a test, which, if not on campus, is often their home. Letting course owners know where their students live can be dangerous for students, as is giving course owners unaccountable access to video recordings of their students’ bodies and homes.

Why Are We Encoding Bodies?

Given how much potential harm these products create for students, why do universities license them? Even if higher education institutions acknowledged the risks to students (and at present, they don’t), these companies offer a product that resonates with several implicit values and practices of higher education that ultimately outweigh the risk to student safety: discriminatory exclusion, the pedagogy of punishment, technological solutionism, and the Eugenic Gaze.

Discriminatory Exclusion

Anytime people from a non-dominant group seek to participate in education, predictable counterarguments emerge that rest on the belief that their inclusion would harm current students, academic standards, productivity, and so on. Algorithmic proctoring companies capitalize on colleges’ and universities’ preexisting discriminatory fears by first stoking those fears and then selling products to alleviate them. Below is an excerpt from a Proctorio promotional video. [Editor's note: this video was removed just after the publication of this article.]

Online education is moving the world’s students into the future at an alarming rate. With the ability to take learning outside the brick walls of our institutions, a vast number of people now have access to education. But this presents a problem of how to maintain academic integrity in a globally competitive job market. Instructors and students alike want to make sure they’re on a level playing field when it comes to academic achievements. Proctorio defends your accomplishments by holding dishonest people accountable, all the while protecting your privacy...

This promotional video plays upon the fear that if we include people not normally inside the “brick walls of our institutions” they will somehow threaten academic integrity. In short, we on the inside are honest, those on the outside are dishonest, and the rate at which “they” are joining “us” is cause for alarm. Proctorio is not an outlier in this; their messaging is representative of how most test proctoring companies market themselves to higher education.

Colleges and universities have a long history of this kind of exclusion. In 1956, a group called the Educational Fund of the Citizen’s Council began distributing pro-segregation propaganda claiming that if Black students were allowed to attend white schools, they would lie, cheat, and generally cause disciplinary problems, and that the best response would be increased discipline and policing. In “The Case Against Coeducation: An Historical Perspective,” Carol K. Coburn outlines some of the biological inferiority arguments used to exclude women from men-only institutions. When Cambridge University held a vote to determine whether women should be granted degrees equivalent to men’s, male students protested by “burning effigies of female scholars and throwing fireworks into the windows of women’s colleges.” Higher education in the United States has feared including marginalized people from the beginning, and test proctoring companies market directly to that fear. Their promotional messaging functions similarly to dog-whistle politics, which is commonly used in anti-immigration rhetoric. It’s also no coincidence that these technologies are being used to exclude people an institution doesn’t want; biometrics and facial recognition have been connected to anti-immigration policies, supported by both Republican and Democratic administrations, going back to the 1990s.

Without having to say so directly, test proctoring companies are communicating, first, that non-traditional students, students of color, international students, and students typically excluded from higher education are threats because they are more likely to cheat and need to be held accountable, and second, that additional surveillance technology (which they will sell you) would protect your institution from them.

The Pedagogy of Punishment

Algorithmic proctoring is the logical fulfillment of higher education’s proclivity for disciplinary approaches to academic integrity, carried into the online environment. Borrowing from Henry A. Giroux, Kevin Seeber describes the pedagogy of punishment and some of its consequences for higher education’s approach to plagiarism in his book chapter “The Failed Pedagogy of Punishment: Moving Discussions of Plagiarism beyond Detection and Discipline.” The pedagogy of punishment ignores that definitions of cheating, plagiarism, and citation are culturally constructed, can seem arbitrary at first, and are a source of anxiety for incoming students, especially those not acculturated to higher education. When we introduce new students to academic conduct policies, we create an environment based on threats and fear, communicating to them that they aren’t trustworthy and that if they break the rules, they will incur severe discipline. We’ve built increasingly sophisticated surveillance methods for detecting when students cheat but fail to communicate to them the contextual, political, and historical forces that created our academic practices for citation, evaluation, and testing.

Sean Michael Morris and Jesse Stommel’s ongoing critique of Turnitin, a plagiarism detection service, outlines exactly how this logic operates in ed-tech and higher education: 1) don’t trust students, 2) surveil them, 3) ignore the complexity of writing and citation, and 4) monetize the data. That last point applies to test proctoring companies as well, but instead of stealing students’ intellectual property, these companies monetize data about students’ bodies to increase the value of their own intellectual property: their algorithms and software. As a business model, this is an ideal scenario for the private sector. Colleges and universities require students to let companies record their bodies and collect biometric data, which these companies then use to refine their products and sell them back to universities. In some cases, institutions pass the cost of the technology on to students, who then pay proctoring companies directly, an average of about $25 per test. There isn’t a clearer example of surveillance capitalism in education.

Technological Solutionism

Cheating is not a technological problem but a social and pedagogical one. Technology is often blamed for creating the conditions in which cheating proliferates and then offered as the solution to the problem it supposedly created; both claims are false. Cheating predates the internet and will not be solved by a tool, a product, or an algorithm, even when that cheating happens online. Our habit of believing that technology will solve pedagogical problems is endemic to narratives produced by the ed-tech community and, as Audrey Watters writes, is tied to the Silicon Valley culture that often funds it. Scholars have been dismantling the narrative of technological solutionism and neutrality for some time now. In her book “Algorithms of Oppression,” Safiya Umoja Noble demonstrates how the algorithms responsible for Google Search amplify and “reinforce oppressive social relationships and enact new modes of racial profiling.” Her body of work includes authoritative critiques of algorithmic bias, technological redlining, and how racism and sexism pervade technology and online culture. Another scholar at the forefront of this conversation is Anna Lauren Hoffmann, who coined the term “data violence” to describe the impact harmful technological systems have on people and how these systems retain the appearance of objectivity despite the disproportionate harm they inflict on marginalized communities. Algorithmic discrimination and data violence can be more difficult to call out than traditional forms of discrimination and violence, not just because the data and code are kept in a black box of intellectual property, but because people are less likely to believe that data and code are even capable of discrimination and violence in the first place. Lastly, Ruha Benjamin has been developing an abolitionist toolkit using race critical code studies, not only to cut through technological solutionist propaganda but also to deconstruct the white supremacy that underpins what she calls the “New Jim Code.”

The Eugenic Gaze

Algorithmic test proctoring encodes ideal student bodies and behaviors and penalizes deviations from that ideal by marking them as suspicious, which threatens students with academic misconduct investigations and exclusion from the educational community. This system of measuring bodies and behaviors, associating certain bodies and behaviors with desirability and others with inferiority, engages in what Lennard J. Davis calls the Eugenic Gaze. To understand this, let’s break down the terms “Eugenic” and “Gaze.” Eugenics is an ideology that aims to improve the genetic quality of humans by erasing undesirable traits. While most eugenics programs focus on race, they often expand their lists of undesirable traits, which have included “...(1) the feeble-minded; (2) paupers; (3) alcoholics; (4) criminals…; (5) epileptics; (6) the insane; (7) the constitutionally weak; (8) those with specific diseases; (9) the deformed; and (10) the deaf, blind, and mute…” Eugenics programs have attempted to remove people with “undesirable” traits through anti-immigration policies, selective breeding programs, marriage restrictions, forced sterilization, murder, and genocide.

Higher education has been deeply complicit in the eugenics movement. Nazism borrowed many of its ideas about racial purity from the American school of eugenics, and universities were instrumental in supporting eugenics research by publishing copious literature on it and by establishing endowed professorships, institutes, and scholarly societies that spearheaded eugenic research and propaganda. Those researchers (and often university presidents) went on to promote federal policies that advanced eugenic goals in areas as far-reaching as immigration, economics, housing, law, and medicine. Roughly 70,000 Americans were forcibly sterilized as a direct result of these policies.

A Gaze, like the Male Gaze or the White Gaze, is a culturally dominant perspective that seeks to create a power difference between a dominant and nondominant group of people by defining the terms through which they are seen, valued, and discussed. Gazes usually share features such as unequal power dynamics, surveillance, control, and conformity. The Eugenic Gaze seeks to measure people’s bodies and behaviors, compare them to an idealized norm, and either reform people who don’t fit that norm through punishment or exclude them from the community altogether. Algorithmic test proctoring applies the Eugenic Gaze by measuring students’ bodies and behaviors (machine learning and facial recognition software), defining which bodies and behaviors are associated with the ideal student (cisgender, white, able-bodied, neurotypical, male, non-parent, non-caretaker, etc.), attempting to reform students who deviate from that ideal (flagging them as suspicious), or excluding them from the community (academic misconduct investigations, which can lead to expulsion). The Eugenic Gaze is a combination of white supremacy, sexism, ableism, cis/heteronormativity, and xenophobia. When we apply the Eugenic Gaze using technology, as we do with algorithmic test proctoring, we’re able to codify and reinforce all of those oppressive systems while avoiding equity-based critiques because of our belief in the neutrality of data and technology.

What Do We Do Now?

Don’t use algorithmic test proctoring. Instead, focus on pedagogical techniques for designing assessments, online or in person, that draw from personal experience or require students to apply concepts in unique contexts. If you have to use algorithmic test proctoring, make sure students know about the test settings and ID requirement well before they take a test, and assure them that you will not consider any behavior flagged as “suspicious” unless it is explicitly described in the syllabus. Talk with students about academic integrity, not just about the rules and consequences, but also about the culture that constructed it and the roles that surveillance capitalism and privacy play in it. If students are uncomfortable with algorithmic test proctoring, support and empower them to communicate this to the administration and, where possible, give them the ability to opt out. Advocate on behalf of students: start a conversation at your institution about what this technology communicates to the students who are forced to use it, what values it represents, and how those may differ from the institution’s stated values. Lastly, read Safiya Umoja Noble, Anna Lauren Hoffmann, Ruha Benjamin, Audrey Watters, and Os Keyes. Each of these scholars offers important analyses and critiques of technology, but also a vision for how it can be used towards justice and care; they’ve helped me understand and continue to give me hope.

Conclusion

Algorithmic test proctoring is a collection of machine learning algorithms that reinforce oppressive social relationships and inflict a form of data violence upon students. It encodes a “normal” body as cisgender, white, able-bodied, neurotypical, and male. It surveils students and disciplines anyone who doesn’t conform to “normal” through a series of protocols and policies that participate in a pedagogy of punishment, ultimately risking students’ academic careers and their psychological, emotional, and physical safety. Companies that build these technologies are able to exploit higher education’s proclivity for discrimination because academia is still afraid of letting the wrong people in. Technology isn’t neutral or objective; it didn’t cause cheating, and it won’t ultimately stop it. It is, however, able to encode and amplify discriminatory beliefs and cast them into invisible and powerful systems that can harm our choices and our bodies.

Cathy O’Neil writes:

Big Data processes codify the past. They do not invent the future. Doing that requires moral imagination, and that’s something only humans can provide. We have to explicitly embed better values into our algorithms, creating Big Data models that can follow our ethical lead. Sometimes that will mean putting fairness ahead of profit.

Collectively, higher education has failed to embed ethical values into educational technology. Algorithmic test proctoring, and many technologies like it, sacrifice student agency in favor of discriminatory exclusion, the pedagogy of punishment, surveillance capitalism, technological solutionism, and the Eugenic Gaze. Educators have an obligation to object, resist, and subvert these systems, to push towards a practice that embodies justice, liberation, and love, and to remain vigilant for the next technological “solution” that promises to “fix” students or education.