This play is the result of a research project carried out with undergraduate students at Purdue University in 2019. The purpose of the project was to explore the possibilities for integrating artificial intelligence (AI) and machine learning into theatrical performance. We examined how theatrical spaces might accommodate an audience of AIs; and, moreover, asked how contemporary performers could exploit the disjunction between human and machine sensing. This play, written for a mixed audience of AI and human spectators, depicts the effects of living in a world where AIs have redrawn the horizon of the sensible.

Introduction

It began as a joke. In a discussion with a group of students about the possible impact of automation on their future careers, one student — double majoring in cybersecurity and drama — commented that it might be some time before machines were sophisticated enough to replace actors. “It could be a while before the Oscar goes to an AI,” he jested. It seemed reasonable to express skepticism about the capacities for machines to excel as actors, but we wondered aloud if they might make for a good audience. What would it mean, I asked, to act for machines? How might performances change when written for AI spectators?

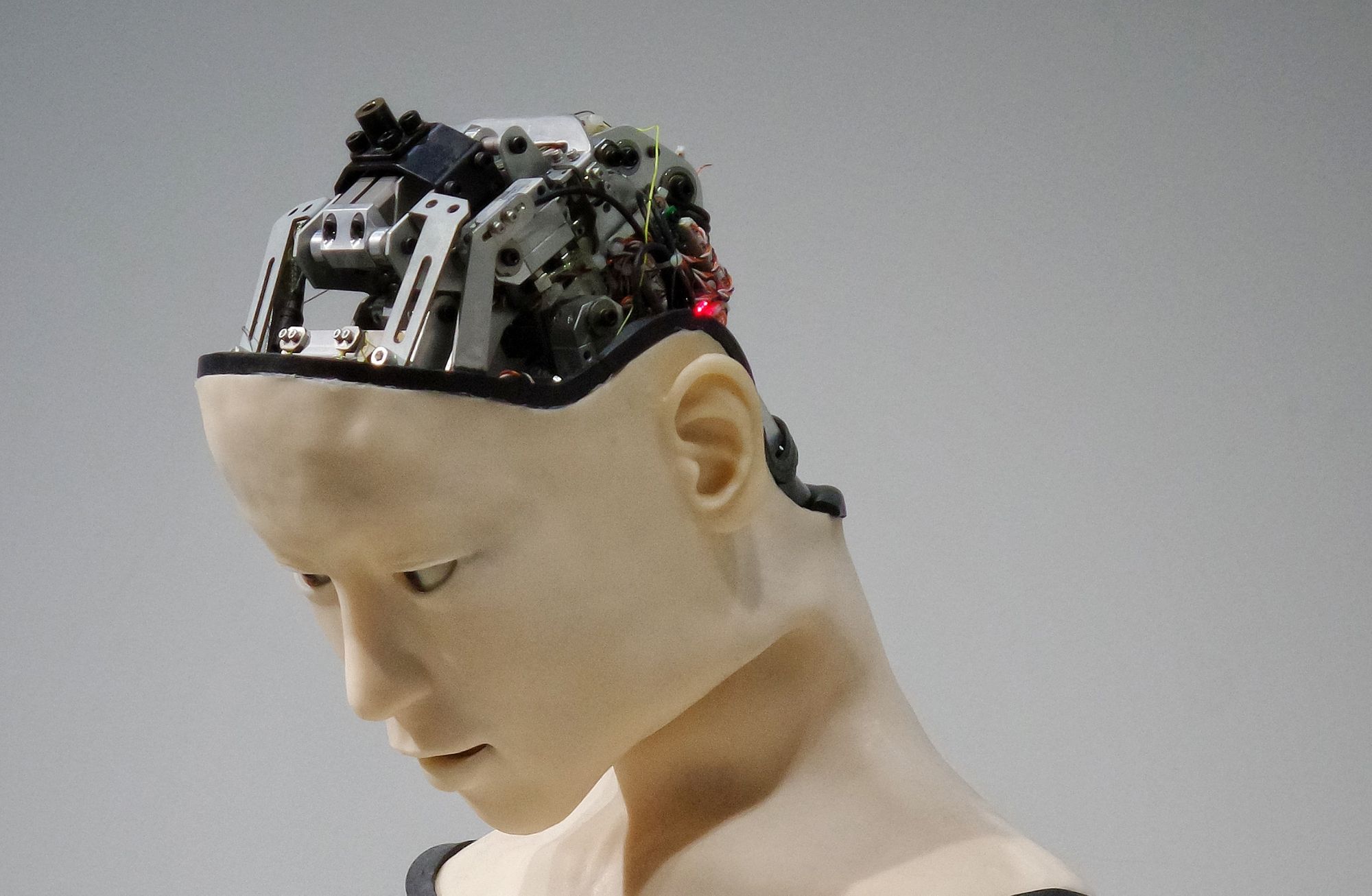

These questions formed the basis of a research project that I initiated with an interdisciplinary group of students. As the work progressed, the largely theoretical nature of these initial questions soon morphed into detailed discussions of the performative potential of machine sensing and the technical challenges of accommodating artificial sensoria onstage. We began to discuss how AI spectators could perceive aspects of the environment that were imperceptible to humans; and, conversely, how difficult it could be to convey basic narrative information to AIs.

Over time, it became clear that placing AI spectators within the theater made the theater more like daily life than it was without them. With this realization, it quickly became apparent that placing this drama in a dystopian future where machines had taken over and humans were forced to perform for their amusement was unnecessary. We could draw inspiration for our narrative from the world we interact with every day, replete as it is with algorithmic observers. Arguably, any aesthetic realism that wants to be taken half-seriously today must contend with the reality of ubiquitous machine intelligence.

The effect of incorporating these machine spectators was a kind of hyper-realism, in which parts of reality typically inaccessible to human audiences became fair game for narrative content. An AI audience may observe action unfolding in high-frequency microwaves or in ultraviolet light. In this respect, it may make less sense to speak of an AI “viewership” than an AI “sensorship” — a term doubly useful for evoking the expanded range of machine sensing as well as the potentially chilling effects of algorithmic surveillance on human expression.

The first half of the semester involved exploratory discussions about what algorithms and neural networks could or could not realistically be made to do or perceive in a live performance environment. Once a few ideas had been generated, students broke into groups to complete their programming assignments while another group of students and I worked on a script that would integrate the technical projects being developed in parallel. At the end of this process, we began combining the diverse components — weaving them into a single production. On April 25, 2019, we debuted the play with a performance in the Krannert Auditorium at Purdue University. What follows here is the script interspersed with technical notes.

Dramatis Personae: Narrator, Model, Comedian, Laughing Algorithm (AI), Emcee

AI Spectators: Facial Recognition Algorithm (detecting actors’ faces), MAC Address catcher (tracking wi-fi enabled devices)

ACT I: A BRIEF HISTORY OF ART

[A video plays on one screen on an otherwise dark stage. The video features images of entoptic phenomena, cave art, and illustrations from academic papers on neural networks. Eventually, the video begins showing pictures of cheeseburgers produced by a Generative Adversarial Network [GAN] (See Figure 1). The Narrator stands in front of the screen. Behind the Narrator, the Model sits on a stool. The model’s face is projected on a separate screen showing how a facial recognition algorithm isolates their face and displays their name. Throughout the first act, the Model will have makeup applied to their face in preparation for the third act. The whole process will be visible to the audience on the second screen.]

Narrator: They call it prisoner’s cinema. Stuck in a dark cell surrounded by blank walls, starved for stimuli, the optic system begins to spontaneously generate hallucinations. The ventral visual pathway becomes hypersensitive — seeking out something distinct amidst the monotony. Pioneering studies confirmed that these hallucinations followed a regular pattern, beginning as rudimentary geometric shapes and eventually coalescing into vivid scenes of animals and landscapes as the sensory deprivation was prolonged. Ancient humans hiding deep in firelit caves experienced prisoner’s cinema as well. They drew these visions on walls, from simple geometric shapes to stampeding animals. They externalized these subjective phenomena, these glitches in the nervous system. We call these externalized perceptual glitches “art”. Millenia have passed, and vision has evolved again. Today, most images are made by and for machines. But how do these machines see? Ask a neural network to interpret an image and it will strip it apart, reducing it to geometrical fragments. Training samples function as an artificial unconscious, providing the algorithm with a repertoire of forms. Given a large enough sample, the algorithm will move, like ancient artists, from simple geometry to object recognition; and, eventually, to creative expression. None of these cheeseburgers exist [cue GAN images]. Each has been generated by a machine. So meet your artist of the future: a seeing machine trained by humans to hallucinate.

[The Narrator exits.]

[Technical note: GANs, or Generative Adversarial Networks, are a class of Neural Network architectures used to synthesize high-fidelity images based on input training data. For our play, we employed an implementation of this algorithm named BigGAN to create images of cheeseburgers. Trained on millions of images, BigGAN took huge amounts of computational power to train, but the results were quite astonishing. Images are data intensive, and since this algorithm relied on building images pixel by pixel from the ground up, only low resolution (and ugly) pictures were able to be produced. However, scientists used nVIDIA’s vast computational resources to run this algorithm so that it would be able to produce very realistic images. After training, they also made the underlying model open source so that other tinkerers could use it to generate their own unique images. In this play, we used this model to demonstrate AI’s growing ability to fool us and gesture toward a future where what we see is not necessarily what we should believe.]

ACT II: An Automated Audience

[The Narrator enters the stage with the Comedian, who assumes a position frozen as if in mid-joke.]

The Narrator: It seemed like a good idea at first. Get comics to test out their act on a programmed audience before letting them loose on the real thing. In a hypercompetitive talent market, the automated audience presented an objective benchmark, a way to rank all those aspiring comics that desired a precious slot on the main stage schedule. The AI audience would be fairer, everyone thought — its existence would undermine the accusations of bias often flung at club managers for not booking enough women, say. We would finally have a true and transparent comedic meritocracy.

At least that was how it was supposed to work. Few had considered the possibility of hacking an AI audience. Would-be comics began morphing their acts to accommodate machine humor. Training samples built on jokes gleaned from dozens of internet databases unwittingly digested the warped humor of the darkest recesses of the net along with everything else. Nonsense phrases tuned to Bayesian statistics produced higher rankings despite being unintelligible to any human audience.

Yet, in a strange turn of events, these flukes began to take hold in the public imagination. Jokes composed of senseless phrases began to show up in memes and pre-teen text messages. Soon the human audiences laughed just as hard at these inscrutable jokes as the machines did.

Sociologists tried to explain this development, and multiple theories were published in reputable journals. Andersen et al. explained it as a classic case of a cargo cult, an attempt to come to terms with a reality populated by forces so far beyond a population’s understanding that it resorts to imitation. Others reanimated Marshall McLuhan’s theory that the medium is the message and claimed it was only natural that a society run by algorithms should start to share an AI’s sense of humor. The debate is ongoing and a frequent topic of conversation at cultural-studies conferences.

In any case, it quickly became apparent that human comics were utterly redundant. The programmable performer and the programmable audience could simply entertain each other — refining their responses across thousands of iterations, while humans merely peered in, laughing at jokes that only underscored their obsolescence. But, if truth be told, comedy has never been so popular.

[The Comedian begins telling jokes. The Laughing Algorithm is projected on one of the screens behind him, its speech-to-text conversions and responses showing in real time.]

Laughing algorithm: Listening.

The Comedian: So a funny thing happened the other day… the baboon Chinese a cup of zucchini!

Laughing Algorithm: Analyzing. [There is a pause.] Not funny.

The Comedian: [Slightly dismayed but determined.] So a funny thing happened the other day… the baboon cherry Zuckerberg zucchini!

Laughing Algorithm: Analyzing. [There is a pause.] Funny. [An MP3 recording of canned laughter plays.]

The Comedian: [Heartened.] I’ve got three words for you guys: cordials, information, jambalaya. That’s right!

Laughing Algorithm: Analyzing. [There is a pause.] Funny. [An MP3 recording of canned laughter plays.]

The Comedian: What did boy bands man say to orange park boys? Seat belt.

Laughing Algorithm: Analyzing. [There is a pause.] Funny. [An MP3 recording of canned laughter plays.]

[Pleased, the Comedian and Narrator exit.]

[Technical note: The machine learning laughter program is a program that receives speech as input and classifies the speech as funny or not funny based on the prediction from a trained machine. The data used to train this model came from Reddit and other datasets of one-liner jokes. The total size of the combined datasets of jokes used to train the program came to about 300,000 jokes. This data was obtained online and is easily accessible. The program works as follows: First a person speaks into the microphone. The speech is converted to text via Google's Speech to Text API. This text is fed to the model for prediction and MP3 audio files based on the prediction are played accordingly. (Funny, Not Funny). The model itself was created using Python. Natural language processing was done to clean the data available online into text that the machine can make sense of (i.e. without stop words and punctuation). Following this cleaning, each text was assigned either +1 for “funny” or -1 for “not funny” and then the dataset of these texts and their corresponding scores were fed to a machine which used TfidfVectorizers and Linear SVCs to classify text to the corresponding scores.]

ACT III: A Counter-Surveillance Fashion Show

[Enter the Narrator.]

Narrator: In 2018, in the Brazilian city of São Paulo, one subway station began using facial recognition technology to deliver targeted advertisements to paying passengers. By classifying the facial expressions of the patrons of the city’s public transit system into distinct categories and altering the style of advertisement presented to them accordingly, the algorithms attempted to influence the purchasing behavior of each commuter. Online protests soon started, with a small but vocal group of passengers requesting micropayments as compensation for the labor of helping to refine these corporate algorithms. These protests intensified after leaked documents revealed that the accumulated data on all passengers was being stored and sold to third parties.

Soon, some passengers began wearing makeup designed to confound the facial recognition systems. Within weeks, however, methods to monetize these highly visual acts of dissent emerged. Passengers adopting this makeup began receiving advertisements for fashion lines inspired by Che Guevara and new works of Marxist philosophy. This covert marketing campaign resulted in a stunning conversion rate of 19%. Nearly one in five of those targeted made a purchase. The corporations managed to sell the protestors’ resistance back to them and São Paulo’s innovations in smart transport have since been adopted across the world. Moreover, the fashion world also found ways to commodify this new style of protest.

[Enter the Emcee. By now the Model’s makeup is complete and is visible to the audience. The Model’s face is no longer recognized as a face by the facial recognition system.]

Emcee: With rapidly advancing facial recognition technology, have you ever wondered how you can deceive the cameras? We’ll show you the hottest anti-facial recognition makeup inspired by the CV Dazzle look book to ensure you deceive facial recognition in style.

Our model is wearing our new spring 2019 look and he is really owning it! [Pauses throughout while Model showcases relevant features to the screen].Fig. 3 The inverted color designs work together to create an unexpected contrast to the skin, ultimately hiding those glorious cheek bones to avoid detection.

As you can see, the face has variations of light and dark shades concentrated around the most identifiable features of the face. This works to obscure the natural curvature of the head and disrupt the symmetry of the face, particularly around the eyes.

Notice the added glam of this look. It makes “shining bright like a diamond” an understatement. The rhinestones are placed strategically around the face with concentrations around the jawline to reflect light back at the camera while adding a contrasting color with the skin and further breaking up the natural symmetry of the face. This is THE night out look!

The next time you want to look your fiercest while also deceiving facial recognition algorithms, check out CVdazzle.com for more looks and find your look today! Big Brother may be watching, but, with our designs, you can slip under the radar while still looking fly.

[Exit Narrator, Emcee and Model.]

ACT IV: A Watchful Eye

[On the screen where the Model’s face was displayed now shows an active MAC address catcher.]

The Narrator: We shed data like dandruff. Sensors collect it the way couch cushions collect coins. And its value is similar. Like pennies, data is nearly worthless by the handful; but collect enough over time and there’s little you can’t buy.

On the screen you see a scrolling list of the MAC addresses of everyone currently sitting in this room. As each MAC address is tagged to a specific smart device, and each smart device linked to a specific user, what you see is a list of thinly veiled personal identities. What can we do with this information, you ask? Let us put it this way, we may as well just have collected all your fingerprints.

At this point, you may begin to feel uneasy. Some of you may even begin to object. How dare we collect this information without your consent! Many of you may not have realized that you were broadcasting your presence so loudly. The augmented ears of our machine audience heard each one of you plainly even as you sat in total silence. Whether or not you meant to, each of you smeared yourself across the local spectrum as soon as you walked in. You may have felt anonymous in the dark of the theater, inconspicuous, cloaked in the sea of faces in the crowd, but you never were. Each of you has sat this entire time under a very watchful eye.

[Exit Narrator. The MAC address catcher continues to operate in an otherwise darkened room.]

[Technical note: The wi-fi system scanning works by putting the wi-fi network card onboard the computer into monitoring mode. This means that the network card will listen in on all publicly broadcasted packets of data that aren’t encrypted. As a result, we’re able to see the packet information, including the corresponding strength of signal and broadcasting MAC address for each device, which is entirely unique. This provides us, and anyone else viewing this information, a means to ID individuals.]